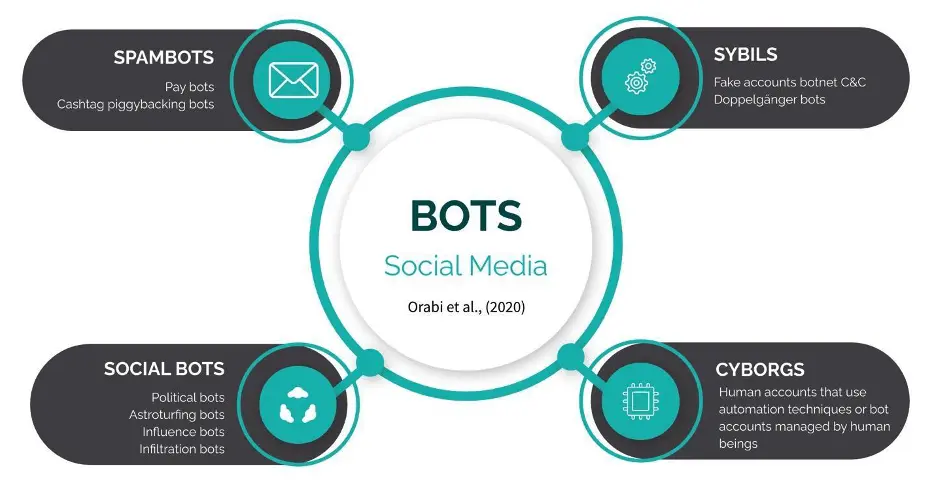

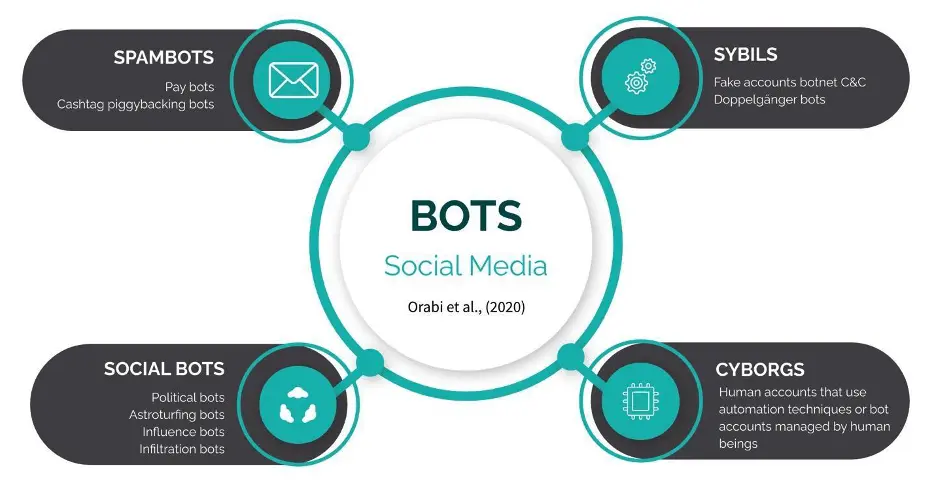

Figure 1. Bots in social media. Source: Own elaboration from Orabi et al. (2020)

The section delves into the knowledge of technologies that, such as deepfakes, bots and swarms of bots, are used to amplify the effects of disinformation on social networks. It explains how these technologies operate and the effects they have on the propagation of disinformation, but also on misinformation and the manipulation of information. The different types of bots in social media are defined and classified and the function of each type is detailed. From this, some strategies aimed at combating its effects are indicated, including the automatic detection of these systems. In addition, it explains how the deepfakes and forgery’s function, and how to counter it, and examples of online applications are given that allow them to be generated easily. Finally, given the concern about the malicious use of deepfakes, it analyses of automatic detection methods.

Main research questions addressed

● What role does technology play in the spread of misinformation and the manipulation of information?

● How bots, chatbots and trolls have been using in information influence campaigns?

● What strategies can be used to combat the effects of bots and automatic detection systems?

● How function the deepfakes and forgeries, and how counter it?

Social media are one of the most obvious manifestations of the technological revolution. They have contributed decisively to the current paradigm shift in communication, leading to the loss of the information monopoly held by traditional media, these are newspapers, press and television, and giving way to a kind of model of journalism 2.0, where each citizen stands as a potential producer and disseminator of content. Compared to the unidirectional traditional information model, where the consumer assumes the exclusive role of receiver, a bidirectional and plural model is now established. Within this model, social networks become the preferred channel of information consumption. This situation led to a darkening of the information activity. The intrinsic characteristics of social networks and instant messaging platforms, as well as the preferential use of smartphones to access the Internet, opened the door to information influence operations by hostile actors.

The immediacy in the distribution of content, together with very high production, both made possible by social networks, increase the difficulties of consumers to discern the veracity, credibility, and reliability of these, as well as the intentionality of the actors who manufacture and disseminate the information. In this context, the demand for responsible consumption of information by the public receiving the messages is outlined, but also the involvement of social media platforms to combat the dissemination of disinformation. On the part of the public, this responsible consumption should be based on a critical approach both to the contents and its verification, but also to the analysis of arguments, and the accounts from which they are distributed. Technology platforms must participate by identifying disinformation content, but also by preemptively detecting those accounts that may be potentially malicious.

The role of AI in this context is central, and can also be attributed to an ambivalent character, supporting the generation of content that serves hostile actors such as Deep fakes, or its dissemination through bots or chatbots, the selection of content according to its prediction of viralization, but it also plays an essential role in combating disinformation and its effects, detecting content, as well as potentially malicious social media accounts.

The use of social media bots (SMB) together with the so-called trolls as amplifiers of informative influence campaigns in different contexts (political, health, climate change, etc.) has been the object of attention by researchers in the field of communication and computer science (Ferrara et al., 2016; Varol et al., 2017).

Social media bots (SMBs) can be defined as automated or semi-automated accounts that mimic human behaviour and interact with other accounts, (Abokhodair et al., 2015). These accounts generally belong to a coordinated net (botnet) and are directed by a Master Bot. On the other hand, trolls are accounts managed by a user who serves the interests of a specific actor. The interaction between troll accounts and bots in informational influence and disinformation actions and campaigns is widely documented (Broniatowski et al., 2020; Badawy et al., 2018).

Among the events that have been the focus of researchers involving large-scale social media bots are the 2016 US presidential campaign (Luceri et al., 2020; Badawy et. al, 2018; 2019; Bessy & Ferrara, 2016), the anti-vaccine debates of the period 2014-2018 (Broniatowsaki et al. 2018), the French presidential elections of 2017 (Ferrara, 2017), the Catalan referendum for independence (2017) (Stella et al., 2018), Brexit (Bastos and Mercea, 2018) or the Covid-19 infodemic (Uyheng et al., 2020).

Subrahmanian et al. have shown that social bots represent an important percentage of the total number of the Twitter social accounts. Between a 5 and 9%, according a 2016 study (2016), which produced the 24% of the total tweets (Morstatter et al., 2016), in turn, a 2022 research conducted by the Israeli cybersecurity company CHEQ showed that from the 5.21 million website visits analysed that came from Twitter, a 11.71% were from bot accounts.

According to their characteristics, there is a wide spectrum of social media bots, from those that automate simple tasks (e.g., sharing or liking), other hybrids directed by humans but that have automated tasks to acting agents equipped with artificial intelligence (Assenmacher et al., 2020) and include machine learning (ML) and Natural Language Processing (NPL) algorithms. Automated accounts that participate in informational influence or disinformation campaigns only constitute a limited percentage of the total set of bots operating on social networks, nor are all targeted for malicious purposes (different types of spam, abuse of credentials, scam, fraud, pornography, e.g.,) (Adewole et. al., 2016). Orabi et al., (2020) applied the following classification for bots in social media:

Figure 1. Bots in social media. Source: Own elaboration from Orabi et al. (2020)

Also noteworthy are chatbots, which allow interaction with users, and especially the so-called Conversational AI, -state-of-the-art chatbots - that also incorporate Natural language understanding (NLU), Machine learning, Deep learning, and predictive analytics which allows them to make decisions, imitating human behaviour (Nuacem AI, 2021). A good example is ChatGPT developed y Open AI and based on GPT-3 algorithm, which understands human discourse, is multilingual, doubt premises, refuse inappropriate offers, and can create texts following instructions such as poems, legal texts, games, etc. Its potential to produce disinformation, reducing the work necessary to write it, while expanding its reach and effectiveness by focusing on specific targets, has been reported by experts from Georgetown University’s Center for Security and Emerging Technology. Another example is Replika a chatbot whose aim is to become the best friend of the users learning from their inputs. The application can lead to a self-isolation of the individual and the reaffirmation of one's own thought even if it is consistent with violence, hate speech or conspiracy theories.

The risks associated with these technologies related to the automation of tasks and their mimicry with human behaviour are also affected by different factors that hinder the investigation of information influence campaigns, as well as the effectiveness of the countermeasures launched. These factors include the confluence of domestic and foreign actors in disinformation campaigns, the offer of influence services through bots by private companies, as well as the "conflict over distinguishing harmful disinformation and protected speech" (Sedova et al., 2021). The difficulties derived from the use of proxies, like local actors, generally at the service of the interests of foreign state actors. Disinformation campaigns, together with the use of social bots and the tendency to work on different social media platforms to reach wider audiences, make it extremely difficult to trace the origin source of the information and to be able to establish the attribution of authorships.

On the other hand, the democratization of disinformation thanks to companies that provide influence services based on the use of bots, has led to its identification being essential, being an important niche for researchers and companies as a line of business given the rapid evolution of AI technologies and their use by hostile actors.

Tackling disinformation from preventive approaches is the main challenge to combat it. The development of newly created social media bot account detection systems, even before they start posting, is essential in identifying disinformation campaigns in their latent phase (Arcos & Arribas 2023).

Different approaches have been valued and implemented to combat the effects of social media bots. Orabi et al. (2020), conducted a systematic literature analysis on strategies for bot detection in social media. The authors also collect some references to works in which other ways of combating them are addressed. Thus, Almerekhi & Elsayed (2015) are oriented more toward the automatic classification of posts rather than to the detection of accounts. Other authors focus on identifying especially vulnerable groups or groups in order to develop defensive strategies (Halawa et al., 2016).

Regarding strategies for the detection of bots, researchers and analysts have explored different approaches. A first trying at classification was carried out by Ferrara et al. (2016) and distinguished between graph-based, feature-based, crowdsourcing-based and combined approaches. Later, the taxonomy was widened with additional subcategories by Adewole et al., (2017).

Van Der Walt and Eloff (2018) also contributed to summarize the different approaches found in the literature on the detection of bots. They identified different key features to explore fake content linked to the social media account; analysis of the profiles; activity features such as the timeframe between account creation and first post; the time between posts; stance and sentiment towards topic issues, and relationship with similar accounts or target accounts.

From all previous classifications, Orabi et al. (2020) propose a taxonomy guided by the methods employed:

● Graph-based

● Machine learning (ML)

o supervised: based content, based behaviour

o semi supervised

o unsupervised: based content, based behaviour

● Crowdsourcing based

● Anomaly based: action-based, interaction-based

Following the Orabi et al., taxonomy together with the later contribution by Ilias & Roussaki (2020), the below models are identified.

Graph based: A graph G is a set of nodes V (G) in a plane and a set of lines (links) E (G) of a curve, each of which either joints two points or joins to itself (West, 1996). These structures allow to representation of the relationships that occur between the different accounts of social media, being possible to identify the botnets. Among the works based on this model, we found (Boshmaf et al., 2016), focused on the detection of profiles more vulnerable to the influence of these bot accounts stand out. The system is based on the attribution of higher weights to those accounts that are real compared to fake.

Machine Learning (ML): Defined as a field of study that allows computers to learn without the need to be programmed. This learning, in the case of bot detection, can be supervised (a classifier learns how to identify accounts, based on labels), semi-supervised (it uses partially labelled data) or unsupervised (the algorithm by itself clusters the input data, labels are not necessary). Within these types, two subcategories have been identified: based behaviour and based content.

An example of supervised learning based on behaviour is BotorNoT (Davis, Varol, Ferrara, Flammini, & Menczer, 2016) a system that determines -from more than one thousand different features - the likelihood that a given account is a bot. It is available for public use through a website.

Cresci et. al., (2016, 2017) developed the digital DNA technique to analyze the collective behavior of social network users to detect botnets. This technique is based on “encoding the behaviour of an account as a sequence of bases, represented using a predefined set of the alphabet of finite cardinality consisting of letters such as A, C, G and T” (Orabi, p. 8). Within this set also can be highlighted the Convolutional Neural Networks (CNNs) detect spam on Twitter both at the account and tweet level (Ilsa & Roussaki, 2020).

Among the unsupervised systems based on the identification of bots based on content, we find models that are based on the search for groups of accounts that distribute the same URL (malicious), or accounts that hijack hashtags such as the DeBot tool (Chavoshi, Hamooni, Mueen, 2016b). Also, noteworthy in this group is the BotCamp tool developed by Abu-El-Rub & Mueen (2019) oriented to political conversation, which uses Debot combined with graph-based methods to model topographical information and cluster the collected bots, and a supervised model to classify user's interactions (agreement or disagreement with a certain sentiment) (Orabi, 12).

As semi-supervised systems can be highlighted clickstream sequenced and semi structured clustering (Orabi, 12)

Crowdsourcing: Includes manual identification of botnets and collect labelled datasets.

Anomaly-based: Models that detect anomalies in the interaction and activity of accounts based on the assumption that legitimate OSN users would have no motive to engage in some odd behaviours, as it will not reward them in any aspect.

Inspiring by the previous works above-mentioned, Ilias et al., (2021) conduct an experiment applying NLP and deep neural networks on 70 different features (e.g., the time between posts and retweets, length of username and profiles, following rate, uppercase and elongated word rate, tweets per day, reputation ratio, number of different sources used, the sentiment of the contents, the time between replies, age of the account...) to different datasets. The interest of this work was to determine the best selection of features through a logistic regression model. These features included: size after compression type, maximum number or mentions in a tweet, size after compression content, unique words unigrams per tweet, retweets (tweet), unique mentions ratio, the distance between username-screen name, average mention per tweet, number of mentions per word, the maximum number of URLs in a tweet...

Deepfakes and forgeries constitutes a challenge for homeland security due these are being used for malicious purposes by hostile actors. Adversary states and motivated political individuals can be served by these technologies to erode public trust in institutions and democracy, realizing fake images or videos where public figures make inappropriate comments or behaviours or share disinformation.

The effects triggered by deepfakes are highly concerning because of their realistic results, are rapidly created, and are cheap due to the freely available software and the use of cloud computing to get processing power.

Although the manipulation of images is distant in time, already in Stalin's time those members of the nomenklatura who had fallen out of favour were erased from the photographic archives, and the current deepfakes models date from 2017. Soon, its potential for manipulation in different contexts was noticed. In 2018, the Center for New American Security (CNAS) published a report warning about the risks of deepfakes for political manipulation and established a five-year deadline for its improvement, at that time it would be impossible to differentiate real images from deepfakes. In early 2022 an experiment conducted by Nightingale and Farid in which 315 volunteers were asked to discriminate fake face images from those that looked real, concluded that humans are not able to identify deepfakes, offering the results achieved an average accuracy of 48.2%. In addition, the authors asked participants to score the images according to the confidence they conveyed to them. The results were disturbing, as artificially generated faces appeared more reliable than real faces because they tended to look more like average faces which also generate more confidence.

Examples of employment of deep fakes can be found in the Ukraine War. Both Ukranian and Russian sides released videos compromising Putin and Zelensky with false declarations.

Deepfakes (stemming from “deep learning” and “fake”), a term that first emerged in 2017, describe the realistic photos, audio, video, and other forgeries generated with artificial intelligence (AI) technologies.

Tolosana et al., (2020) categorized deepfakes (audio non-included) into the following categories according to their format and kind of manipulation:

● Entire Face Synthesis: It refers to entire non-existence images artificially created.

● Identity Swap: Consists of replacing the face of a person in a video

● Attribute Manipulation: It refers to the manipulation of a certain attribute into images (e.g., age, hair or eyes colour, gender…).

● Expression Swap: this kind includes those images or videos where facial expression has been modified.

Nguyen et al., (2022) used two different categories referred to manipulated videos according to AI algorithms:

● Lip-sync deepfakes refer to videos that are modified to make the mouth movements consistent with an audio recording.

● Puppet-master deepfakes category refers to videos of a target person (puppet), who is animated following the facial expression and movements of another person (master). (p.1)

Deepfakes are based on Machine Learning models, especially in GANs (Generative Adversarial Networks) which work with two different neural network models that are trained in competition with each other. The first one, the generator, is tasked with creating counterfeit data (photos, audio recordings, or video) that replicate the properties of the original data set. The second network, or the discriminator, is trained to identify the counterfeit data. From the results of each iteration between both networks, the generator adjusts to create increasingly realistic data and will go on -thousands or millions of iterations—until the discriminator can no longer distinguish between real and counterfeit data.

Some examples of software that generate deepfakes are:

● Which face is real? A GAN-based tool to generate entire non-existence faces.

● FaceSwap: A free tool that used autoencoder-pairing structure a kind of deep learning model.

● Deepfacelab: This open-source software is the leading solution as deepfake generator. To obtain the maximum advantage and get more realistic results, the software requires the use of Adobe after Effects and Davinci Resolve. Beyond the changing of faces, age lifting and lips manipulations functions are supported.

● Xpression Camera: based on voice2face technology. Let to launch a realistic avatar in real time that copy users´ facial expressions. It can be used in streaming services such as Twitch, or in videoconferences.

● Faceswap: open-source software to generate deepfakes from an imported video.

● Omniverse Audio2Face: Developed by NVIDIA, this tool can animate a face (predetermined) from any audio path.

● Uberduck.ia: A very powerful audio deepfake tool that let to select between thousands of voices, such as celebrities, TV characters, YouTubers, TikTokers, etc., and insert a text, upload an audio file or record audio. There is a free version.

Concern about the malicious use of deepfakes has become a topic of interest for governments and organizations and research on the development of automatic detection methods is on the rise, being currently an important field for computer science researchers.

Following the surveys carried out by Tolosana et al., 2020 and Nguyen et al., 2022, we can highlight the following methods (cataloged according to the taxonomy proposed by Tolosana et al., 2020):

Photographic images: Created in their entirety, different proposals have been made, including the analysis of features of the GAN-pipeline such as the colour (McCloskey and Albright, 2018), steganalysis methods such as the pixel co-occurrence matrices (Nataraj et al., 2019), or the detection of fingerprints inserted by GAN architectures using pure deep learning methods such as convolutional traces (Guarnera et al., 2020).

Swap faces deepfakes: The following approaches for identification have been developed: detection of inconsistencies between lip movements and audio speech, features related to measures like signal-to-noise ratio, specularity, blurriness, etc; eye colour; missing reflections, and missing details in the eye and teeth areas. Analysis of facial expressions and head movements; eye blinking, blood flow or combined systems based on both facial expressions and head movements have also been proposed in the literature.

Attribution manipulation: Some authors propose to analyse the internal GAN pipeline in order to detect different artifacts between real and fake images. Many studies have also focused on pure deep learning methods, either feeding the networks with face patches or with the complete face. These systems provide results close to 100% accuracy due to the GAN fingerprint information present in fake images that are used by these systems. However, recent proposals found in the literature to remove GAN fingerprints from the fake images while keeping a very realistic appearance represent a challenge.

Expression swap detection based on deep learning approaches consider both spatial and motion information (3DNN, I3D and 3DResnet approaches); steganalysis and mesoscopic, visual effects; image and temporal information through recurrent convolutional network approaches or optical flow fields to exploit possible inter-frame dissimilarities due to fake images have unnatural optical flow due to unusual movement of lips, eyes...

Governments such as the US have focused efforts on the development of detection architecture. The Defence Advanced Research Project Agency (DARPA) has developed two systems: Media Forensics (MediaFor) y Semantic Forensics (SemaFor). Recently, Facebook Inc. with Microsoft Corp and the Partnership on AI coalition have launched the Deepfake Detection Challenge to improve the research and development in detecting and preventing deepfakes.

Despite all these efforts aimed at detection, there are important limitations, and, in practice, they take us to the field of cat-and-mouse games between hostile actors trying to overcome detection systems and detection architectural designers. While the aforementioned approaches can be effective, this accuracy is based on laboratory studies where the videos are discriminated from well-known datasets of deepfakes. There are therefore no guarantees of similar performance on new materials. Studies into detection evasion show that even simple modifications can drastically reduce the reliability of a detector. On the other hand, it should also be noted that in practice the deepfake videos that are uploaded to social networks are compressed, so the image quality would make detection difficult. Other preventive approaches that are being explored are adversarial attacks on deepfake algorithms, and the implementation of blockchain systems or distributed ledger technology (DLT) to leave a record of original audiovisual materials or watermarks within the audiovisual files.

However, it also warns of the vulnerabilities of these approaches such as attacks on the integrity of the DLT itself, or the dependence on technicians and organizations that will be responsible for operating the system, or the existence of a link between the registry and the recipient of the information. Another of the formulas that are presented as effective is the awareness of potential audiences through exposure to pre-bunking or inoculation techniques (van Huijstee et al., 2021, p. 41).

1. Abokhodair, N., Yoo, D., & McDonald, D. W. (2015). Dissecting a social botnet: Growth, content and influence in Twitter. Proceedings of the 18th ACM conference on computer supported cooperative work & social computing. ACM839–851.

2. Assenmacher, D.; Clever, L.; Frischlich, L.; Quandt, T.; Heike T., Heike; Grimme, C. (2020). “Demystifying Social Bots: On the Intelligence of Automated Social Media Actors”, Social Media + Society, July-September 2020: 1–14, /doi/10.1177/2056305120939264

3. Badawy, A.; Addawood, A.; Lerman, K.; and Ferrara, E. (2019). “Characterizing the 2016 russian ira influence campaign”, Social Network Analysis and Mining 9(1):31.

4. Badawy, A.; Ferrara, E.; and Lerman, K. 2018. “Analyzing the digital traces of political manipulation: The 2016 russian interference twitter campaign”. In ASONAM, 258–265.

5. Bastos, M. and Mercea, D. (2018). “The public accountability of social platforms: lessons from a study on bots and trolls in the Brexit campaign”, Phil. Trans. R. Soc. A 376: 20180003. http://dx.doi.org/10.1098/rsta.2018.0003

6. Bessi, A., and Ferrara, E. (2016). “Social bots distort the 2016 us presidential election online discussion”, First Monday, 21(11)

7. Broniatowski, D. A., Jamison, A. M., Qi, S., AlKulaib, L.; Chen, T.; Benton, A.; Quinn, S. C. and Dredze, M. (2018). “Weaponized Health Communication: Twitter Bots and Russian Trolls Amplify the Vaccine Debate”, American Journal of Public Health 108, (October 2018): 1378-1384.

8. Chavoshi, N., Hamooni, H., and Mueen, A. (2016). “Debot: Twitter bot detection via warped correlation”, ICDM817–822.

9. Cresci, S.; Petrocchi, M.; Spognardi, A.; Tognazzi, S. (2016). “Dna-inspired online behavioral modeling and its application to spambot detection”, IEEE Intelligent Systems, 31(5), 54-68

10. Cresci, S., Di Pietro, R., Petrocchi, M., Spognardi, A., & Tesconi, M. (2017). “Social fingerprinting: detection of spambot groups through DNA-inspired behavioral modeling”, IEEE Transactions on Dependable and Secure Computing, 15(4), 561-576. https://www.doi.ord./ 10.1109/TDSC.2017.2681672.

11. Cresci, S.; Petrocchi, M.; Spognardi, A. and Tognazzi, A. (2019). “On the capability of evolved spambots to evade detection via genetic engineering”, Online Social Networks and Media, 9, 1-16,, ISSN 2468-6964, https://doi.org/10.1016/j.osnem.2018.10.005.

12. Davis, C.A.; Varol, O.; Ferrara, E.; Flammini, A. and Menczer, A. (2016). “BotOrNot: A system to evaluate social bots”, In WWW companion. ACM

13. Ferrara, E. (2017). “Disinformation and social bot operations in the run up to the 2017 french presidential election”, First Monday 22(8).

14. Ferrara, E.; Varol, O.; Menczer, F.; and Flammini, A. (2016). “Detection of promoted social media campaigns”, In Tenth International AAAI Conference on Web and Social Media, 563–566.

15. Goga, O., Venkatadri, G., and Gummadi, K. P. (2015). “The doppelgänger bot attack: Exploring identity impersonation in online social networks”, Proceedings of the 2015 internet measurement conference. ACM141–153.

16. Guarnera, L.; Giudice, O. and Battiato, S. (2020). “DeepFake Detection by Analyzing Convolutional Traces,” in Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops.

17. van Huijstee, M.; van Boheemen, P.; Das, D.; Nierling, L.; Jahnel, J.; Karaboga, M., and Fatun, M. (2021). “Tackling deepfakes in European policy”, Study Panel for the future of Science and Technology, European Parliamentary Research Service.

18. Johansen, A. G. (2022). “What’s a Twitter bot and how to spot one.” Norton Emergency Trends, September 5, 2022.

19. Luceri, L.; Deb, A.; Giordano, S.; and Ferrara, E. (2019). “Evolution of bot and human behaviour during elections”; First Monday 24(9).

20. McCloskey, S. and Albright, M. (2018). “Detecting GAN-Generated Imagery Using Color Cues,” arXiv preprint arXiv:1812.08247.

21. Morstatter F.; Wu, L.; Nazer, T.; H, Carley K. and Liu, H. (2016) “A new approach to bot detection: Striking the balance between precision and recall.” In: IEEE/ACM Conference on Advances in Social Networks Analysis and Mining (ASONAM), 533–540.

22. Nataraj, L; Mohammed, T.; Manjunath, B.; Chandrasekaran, S.; Flenner, A.; Bappy, J. and Roy-Chowdhury, A. (2019) “Detecting GAN Generated Fake Images Using Co-Occurrence Matrices,” Electronic Imaging, 5, 1–7

23. Nightingale, S. J. and Farid, H. (2022). “AI-synthesized faces are indistinguishable from realfaces and more trustworthy”, PNAS, 2019(8), e2120481119 https://doi.org/10.1073/pnas.2120481119

24. Orabi, M.; Mouheb, D.; Al Aghbari, Z.; Kamel, I. (2020). “Detection of bots in social media: A systematic review”, Information Processing & Management, 57 (4), 102250. https://doi.org/10.1016/j.ipm.2020.102250.

25. Sedova, K.; McNeill, C.; Johnson, A.; Joshi, A. and Wulkan, I. (2021). “AI and the Future of Disinformation Campaigns Part 2: A Threat Model”, CSET Policy Brief CSE https://cset.georgetown.edu/publication/truth-lies-and-automation/

26. Tolosana, R.; Vera-Rodriguez, R.; Fierrez, J.; Morales, A. and Ortega-Garcia, J. (2020). “DeepFakes and Beyond: A Survey of Face Manipulation and Fake Detection”, https://arxiv.org/pdf/2001.00179.pdf

27. Uyheng, J., Carley, K.M. (2020). “Bots and online hate during the COVID-19 pandemic: case studies in the United States and the Philippines”, J Comput Soc Sc 3, 445–468 https://doi.org/10.1007/s42001-020-00087-4